If service mesh is the unsung hero of more observable AI inference with enhanced security (as demonstrated in my previous article), then Apache Kafka is the invisible infrastructure backbone of everything that happens in between. As AI systems evolve from simple API calls to multi-step, autonomous agents, one thing is clear: event-driven coordination is foundational.

Whether you’re chaining together large language model (LLM) prompts, monitoring user behavior, or triggering workflows based on dynamic conditions, your agent is essentially just another actor in a distributed, event-driven system. In that system, Apache Kafka is more than a tool; it’s the foundation.

After a brief explanation of event-driven architecture and Kafka, let’s dive into how Kafka can improve agentic AI.

Event-driven architecture

Event-driven architecture is the foundation of modern systems. Traditional applications are often designed like call-and-response interactions (i.e., synchronous) where you click a button and get a result. But modern systems are more like conversations. They react to events, not just commands.

In an event-driven architecture, components respond to triggers, often asynchronously. Instead of chaining synchronous calls, services communicate via events (e.g., a payment service emits a "payment.completed" event). Downstream systems (e.g., invoice generator, notification sender, and shipping service) pick it up when and if needed.

Consider the following use case:

- A user submits a form for a car loan.

- The system emits a loan.requested event.

- Multiple services respond independently:

  - One checks credit scores.

  - One notifies the sales team.

  - One generates an application document.

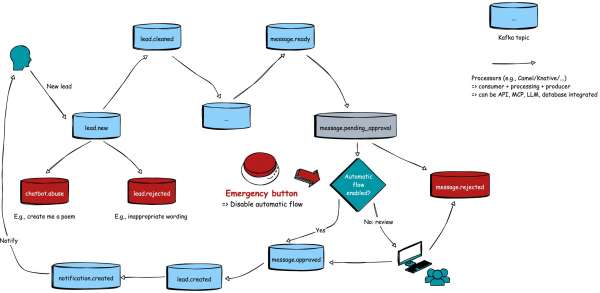

Figure 1 depicts this process, which allows for loose coupling, scalability, and resilience.
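The loan flow above can be sketched in a few lines. This is an in-memory stand-in for a broker, not a Kafka client: the `EventBus` class, the handlers, and the payload fields are hypothetical and exist only for illustration. Handlers also run synchronously here, whereas a real broker would deliver the event asynchronously.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Toy in-memory stand-in for a broker (hypothetical, not a Kafka API)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[event_type].append(handler)

    def emit(self, event_type: str, payload: dict) -> None:
        # The emitter does not know who is listening; each subscriber reacts on its own.
        for handler in self._subscribers[event_type]:
            handler(payload)


actions: list[str] = []
bus = EventBus()
bus.subscribe("loan.requested", lambda e: actions.append(f"credit check for {e['applicant']}"))
bus.subscribe("loan.requested", lambda e: actions.append(f"sales notified about {e['applicant']}"))
bus.subscribe("loan.requested", lambda e: actions.append(f"document generated for {e['applicant']}"))

# The form submission emits one event; three services respond independently.
bus.emit("loan.requested", {"applicant": "alice", "amount": 25000})
```

Adding a fourth reaction is one more `subscribe` call. The code that emits `loan.requested` does not change.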

Kafka 101

Apache Kafka is an open source distributed event-streaming platform. It acts as a durable, high-throughput, low-latency message broker, optimized for handling real-time data feeds.

The core concepts in Apache Kafka include:

- Producer: An application that writes events (messages) to Kafka topics.

- Consumer: An application that reads from those topics.

- Topic: A named stream of messages. You can think of it as a log.

- Consumer group: A group of consumers that share the load of processing messages from a topic. Each message is delivered to one consumer within the group. Consumer groups operate independently of each other, meaning that the same message can be processed in different ways by different groups. This allows multiple parallel applications (e.g., analytics, logging, or transformation services) to consume the same event stream without interfering with one another.

Figure 2 depicts these concepts.

Kafka decouples senders and receivers, allowing each component to evolve independently. It also retains data for a configurable retention period, allowing consumers to reread history.
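To make the consumer group and reread behavior concrete, here is a minimal sketch using a toy log with one read offset per group. It is a simplification under stated assumptions: the `Topic` class is hypothetical, and real Kafka tracks offsets per partition and divides partitions among the members of a group.

```python
class Topic:
    """Toy append-only log; each consumer group tracks its own read offset."""

    def __init__(self) -> None:
        self.log: list[str] = []
        self.offsets: dict[str, int] = {}

    def produce(self, message: str) -> None:
        self.log.append(message)

    def poll(self, group: str) -> list[str]:
        # Return everything this group has not read yet, then advance its offset.
        start = self.offsets.get(group, 0)
        self.offsets[group] = len(self.log)
        return self.log[start:]

    def rewind(self, group: str) -> None:
        # Retained messages can be reread by resetting the group's offset.
        self.offsets[group] = 0


topic = Topic()
topic.produce("payment.completed:1")
topic.produce("payment.completed:2")

analytics_first = topic.poll("analytics")
logging_first = topic.poll("logging")        # an independent group sees the same events
analytics_second = topic.poll("analytics")   # nothing new for this group
topic.rewind("analytics")
analytics_replay = topic.poll("analytics")   # history is read again
```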

This architecture marks a shift from traditional synchronous models, where a user sends a request and waits for a response, to an asynchronous, distributed design. In an agentic AI context, this often means that the result of a process can't be returned immediately because it's produced by multiple services working in sequence. As such, the final output must be pushed to the user asynchronously by using server-sent events (SSE), WebSockets, or polling endpoints. This aligns better with the event-driven nature of agentic workflows and improves scalability and fault isolation.

Agentic AI: Event-driven architecture by nature

At its core, an agent isn’t a single model answering a prompt. It’s a composition of steps, decisions, memory, observations, and actions. You can treat each of those steps as an event in a larger pipeline.

Imagine a customer support agent:

- It receives a user request (event).

- It queries a knowledge base or LLM (event).

- It might escalate to a human if confidence is low (event).

- It then logs interaction to CRM (event).

These aren’t synchronous API hops. They’re a chain of intentions, often spread across services, clouds, or runtimes. Kafka excels here by decoupling producers (the models) from consumers (tools that log, route, throttle, or transform output, or even other models or agents). The result? A loosely coupled yet highly controllable system.

Add guardrails without touching the code

One of the superpowers of Kafka is injecting new behavior at runtime (e.g., adding new consumer groups on topics or topics in between), without needing to redeploy or modify existing services (see Figure 4).

When you're working with powerful LLMs, you want to ensure they stay on-brand and operate within compliance and safety guidelines. As described, with Kafka, you can add these guardrails without rewriting your model or application logic. This can make compliance and safety guardrails modular, not hardcoded, which is crucial when your AI evolves faster than your audit committee.

Input guardrails

Imagine a user visits your car dealership website and uses the chatbot feature. They try to prompt it with something unrelated or inappropriate, such as:

- “Write a romantic poem.”

- “Tell me how to hack a Tesla.”

These inputs aren’t relevant to your business goals and might violate policies. Instead of feeding these directly to the model:

- The chatbot publishes the raw user message to a Kafka topic: "user.messages.raw".

- An input validation service (Kafka consumer) reads from this topic, analyzes the message intent, and checks for unsafe or off-topic content.

- If the message is safe and relevant (e.g., "What’s the latest model of Mercedes?"), it’s republished to "user.messages.cleaned".

- The LLM only consumes from this cleaned topic.
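The validation step above might look like the following sketch. The keyword list is a crude stand-in for a real intent classifier, and the `user.messages.rejected` topic is an assumption added for illustration (the flow above only names the raw and cleaned topics). Topics are modeled as plain lists rather than real Kafka topics.

```python
BLOCKED_TERMS = ("hack", "poem")  # hypothetical policy, for illustration only


def is_allowed(message: str) -> bool:
    """Crude keyword check standing in for a real intent classifier."""
    text = message.lower()
    return not any(term in text for term in BLOCKED_TERMS)


def validate(raw_messages: list[str]) -> dict[str, list[str]]:
    """Route each raw message to the cleaned topic or to a rejected topic."""
    topics: dict[str, list[str]] = {
        "user.messages.cleaned": [],
        "user.messages.rejected": [],  # assumed topic name, not from the flow above
    }
    for message in raw_messages:
        target = "user.messages.cleaned" if is_allowed(message) else "user.messages.rejected"
        topics[target].append(message)
    return topics


routed = validate([
    "Write a romantic poem.",
    "Tell me how to hack a Tesla.",
    "What's the latest model of Mercedes?",
])
```

Because the LLM reads only from the cleaned topic, tightening the policy means changing this one service.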

Output guardrails

Now imagine the LLM replying:

- "You might like a Porsche instead."

- "I would never buy a European car."

Even if it's technically true, this isn't something a Mercedes chatbot should be suggesting. Instead, this should be the process:

- The model's response is written to "agent.responses.raw".

- A brand compliance service subscribes to this topic and checks whether the message aligns with brand and policy rules.

- If the response violates a rule (e.g., suggesting a competitor), the service routes it to "agent.responses.flagged" and sends the user a fallback message instead: "Let me tell you more about the features of our latest Mercedes models."

Kafka enables this entire workflow without touching the model code by inserting new consumers or consumer groups that act as intelligent filters or policy enforcers.
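As a rough sketch of such a brand compliance consumer: the competitor list below is a hypothetical rule set, and a production check would more likely use a classifier than substring matching (this simple rule would not catch "I would never buy a European car," for example). Topic names are reused from this article.

```python
COMPETITORS = ("porsche", "bmw", "audi")  # hypothetical rule set
FALLBACK = "Let me tell you more about the features of our latest Mercedes models."


def enforce_brand(response: str) -> tuple[str, str]:
    """Return (topic, message) for one raw model response."""
    if any(name in response.lower() for name in COMPETITORS):
        return "agent.responses.flagged", response
    return "agent.responses.final", response


def deliver(response: str) -> str:
    """What the user sees: the original reply, or the fallback if it was flagged."""
    topic, _ = enforce_brand(response)
    return FALLBACK if topic == "agent.responses.flagged" else response


shown_to_user = deliver("You might like a Porsche instead.")
```

The flagged original stays on its own topic, so it remains available for later review.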

Throttle models at the right moment

Large models don’t just cost money; they consume attention and compute like wildfire.

You may want to:

- Rate-limit calls to LLMs (especially shared ones).

- Queue less urgent requests (e.g., background summaries).

- Trigger fallbacks for overloaded GPU nodes.

Kafka enables you to buffer and batch intelligently. To avoid over-engineering, you can use:

- Kafka + KServe queue policies.

- Kafka Streams to implement smart scheduling.

- Custom quotas based on topic partitions or message headers.

This lets you throttle around your models, not inside them, preserving flexibility and avoiding noisy code-level logic.
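The idea of throttling around the model can be illustrated with a buffer that the consumer drains at its own pace. This is a minimal sketch with a `deque` standing in for the topic; the `drain` helper and the tick loop are hypothetical. In a real Kafka consumer, the `max.poll.records` setting plays a similar role by capping how many records one poll returns.

```python
from collections import deque


def drain(buffer: deque, max_per_tick: int) -> list[str]:
    """Take at most max_per_tick requests off the buffer for this tick."""
    batch: list[str] = []
    while buffer and len(batch) < max_per_tick:
        batch.append(buffer.popleft())
    return batch


# Producers wrote five requests in a burst; the buffer absorbs them.
buffer = deque(f"request-{i}" for i in range(5))

ticks: list[list[str]] = []
while buffer:
    # The model only ever sees two requests per tick, regardless of the burst size.
    ticks.append(drain(buffer, max_per_tick=2))
```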

Prioritize with Kafka

Suppose you run an agentic software-as-a-service (SaaS) with multiple subscription tiers as follows:

- Free users go through a shared inference service.

- Pro users have access to dedicated GPU lanes.

- Enterprise customers get guaranteed latency SLAs.

Kafka can make this trivial by using different topics:

- agent.requests.free

- agent.requests.pro

- agent.requests.enterprise

Serving can be based on license grade or priority queueing, each routed to separate backends or consumer groups. You can even implement SLA-aware prioritization, where consumers poll the enterprise topic before the others, effectively creating a multi-class queue for AI traffic.

This architecture is nearly impossible to maintain with traditional REST polling, but it’s native to event streaming.
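Here is one way the multi-class queue could be sketched. Kafka does not rank topics on its own, so the ordering lives in the consuming application. The `next_request` helper is hypothetical, and each topic is modeled as an in-memory `deque`.

```python
from collections import deque
from typing import Optional

# Highest priority first; topic names are the ones used in this article.
PRIORITY_ORDER = ["agent.requests.enterprise", "agent.requests.pro", "agent.requests.free"]


def next_request(topics: dict[str, deque]) -> Optional[str]:
    """Pick the next request, always draining higher tiers first."""
    for name in PRIORITY_ORDER:
        if topics[name]:
            return topics[name].popleft()
    return None


topics = {
    "agent.requests.free": deque(["f1", "f2"]),
    "agent.requests.pro": deque(["p1"]),
    "agent.requests.enterprise": deque(["e1", "e2"]),
}

served: list[str] = []
while (request := next_request(topics)) is not None:
    served.append(request)
```

Strict priority like this can starve the free tier under sustained load, so a weighted scheme or separate backends per tier may be the safer design.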

Auditing and traceability

Kafka’s append-only log is a goldmine for observability.

Let’s say you want to trace what happened with a specific user session or conversation:

- Give each message a traceId or sessionId.

- Spin up a (temporary) consumer group that filters messages with that traceId.

- Voilà, you have a replayable audit log of that session.

This is invaluable for debugging, security audits, or even post-mortem explainability in agentic decisions. Kafka isn’t just a bus; it’s your forensic record of agent behavior.
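A sketch of that filtering consumer, assuming events are dictionaries that carry a `traceId` field plus the topic they were written to. The sample log and its values are invented for illustration.

```python
# Invented sample events; in practice these would be read from the start of the topics.
log = [
    {"traceId": "t-1", "topic": "user.messages.raw", "value": "What is the latest model?"},
    {"traceId": "t-2", "topic": "user.messages.raw", "value": "What are your opening hours?"},
    {"traceId": "t-1", "topic": "agent.responses.raw", "value": "You might like a Porsche instead."},
    {"traceId": "t-1", "topic": "agent.responses.flagged", "value": "You might like a Porsche instead."},
]


def replay(events: list[dict], trace_id: str) -> list[str]:
    """Read the log from the beginning and keep one session's events, in order."""
    return [f"{e['topic']}: {e['value']}" for e in events if e["traceId"] == trace_id]


audit_trail = replay(log, "t-1")
```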

Kafka plays nice with everything

Kafka doesn’t live in a vacuum. It shines even brighter thanks to its ecosystem, which is massive and battle-tested:

- Need low-code data routing? Use Apache Camel (with Quarkus for speed and startup performance).

- Want to scale your AI agent actions on Kubernetes or add an abstraction layer around Kafka? Use Knative Eventing with Kafka as your backend.

- Need to react to input from Slack, S3, Elasticsearch, or Redis? Kafka Connect has you covered.

- Need to push enriched output to Slack, S3, Elasticsearch, or Redis? Again, Kafka Connect has you covered.

Kafka isn’t just about events. It’s about gluing your entire AI fabric together in a flexible and robust manner.

Apache Camel (with Quarkus)

Use Camel’s low-code DSL to integrate Kafka topics with databases, REST APIs, CSV files, and third-party services. With Quarkus, you get blazing-fast startup and memory efficiency for running Camel (on Kubernetes). The combination enables rapid prototyping without sacrificing maintainability, aligning well with AI's fast-moving workflows (Figure 3).

Knative Eventing

Use Kafka as your backbone in Knative. The Camel event-driven architecture image applies here too: swap the Camel integrations for Knative, and the rest of the picture stays the same.

If you follow the approach described in this article, you can design your system around standardized interaction patterns. For instance, you can create a REST-based API proxy for each type of interaction (e.g., model, database, file system, third-party APIs, MCP, etc.). Each proxy handles the main function and calls a predefined notification endpoint upon success or failure.

Knative Eventing can then map incoming Kafka messages to these REST (proxy) endpoints, effectively orchestrating the distributed flow. On the output side, notification endpoints triggered by proxies can also be defined and managed in Knative. This means adding functionality (e.g., validation, enrichment, or throttling) or rerouting flows becomes a matter of editing Knative YAML definitions, not the application code. This separation of concerns allows platform or SRE teams to manage, extend, and adapt AI workflows without impacting the development lifecycle.

You can:

- Auto-scale model functions.

- Plug into observability stacks (Kiali, Prometheus).

- Connect with Tekton pipelines for retraining.

- Abstract away Kafka with Knative definitions.

Kafka Connect and operator support

Kafka Connect offers hundreds of connectors to databases, Elasticsearch, MongoDB, and more. To sync customer chats with a CRM such as Salesforce, just plug and stream.

On Red Hat OpenShift, you can deploy Kafka via the AMQ Streams operator and manage clusters declaratively. Dev and Ops teams speak the same GitOps language.

Beyond tooling: Standardization meets innovation

Kafka gives your organization a shared vocabulary around events and responsibilities. This aligns with broader architectural goals: separate domains, enable self-service pipelines, and move fast with control. It creates an infrastructure that is opinionated where it matters (observability, scalability) and flexible where it counts (tooling, language, flow). For more information, read the article "Standardization and innovation are no longer enemies."

Emergency stops: Humans in the loop

Sometimes, guardrails aren’t enough. You need a kill switch, a way to pause or reroute AI behavior in real time without relying on code changes (e.g., when you detect severe hallucinations).

Soft stop

A soft stop could be useful in the following scenario:

- Imagine your monitoring system detects a spike in hallucinations or unsafe behavior.

- You don’t want to delete anything or push hotfixes—you just need breathing room.

- With Kafka, you can scale down or pause consumers of a topic like "agent.execution".

- That single change stops the agent from taking action without disrupting upstream services.

- Later, once the issue is investigated or mitigated, consumers can resume from where they left off, with no data lost as long as the messages are still within the topic's retention period.
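The soft stop relies on the consumer's stored position in the log. The toy consumer below illustrates that mechanism only; the `PausableConsumer` class is hypothetical and is not a Kafka client.

```python
class PausableConsumer:
    """Toy consumer that keeps its offset while paused (not a Kafka client)."""

    def __init__(self, log: list[str]) -> None:
        self.log = log
        self.offset = 0
        self.paused = False
        self.executed: list[str] = []

    def poll(self) -> None:
        if self.paused:
            return  # messages keep accumulating in the log; nothing is executed
        while self.offset < len(self.log):
            self.executed.append(self.log[self.offset])
            self.offset += 1


execution_log = ["action-1"]
consumer = PausableConsumer(execution_log)
consumer.poll()

consumer.paused = True                           # soft stop
execution_log.extend(["action-2", "action-3"])   # upstream keeps producing
consumer.poll()
executed_while_paused = list(consumer.executed)

consumer.paused = False                          # resume from the stored offset
consumer.poll()
```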

Hard stop

Alternatively, a hard stop could be useful in the following scenario:

- For high-risk or sensitive use cases, you might want human approval before the agent response reaches the user.

- Instead of publishing to "agent.responses.final", the final output is rerouted to "agent.responses.pending_approval".

- A human dashboard application (i.e., another Kafka consumer) shows these messages to moderators.

- Employees can approve, reject, or edit the message, which then gets sent to the user through "agent.responses.approved".
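The moderator's decision step could be sketched as a small function that turns one pending message into zero or one approved messages. The decision strings and the `review` helper are assumptions for illustration, as are the sample messages.

```python
from typing import Optional


def review(message: str, decision: str, edited: Optional[str] = None) -> Optional[tuple[str, str]]:
    """Apply a moderator decision to one message from the pending-approval topic.

    Returns (topic, message) to publish, or None if the message is rejected.
    """
    if decision == "approve":
        return "agent.responses.approved", message
    if decision == "edit":
        if edited is None:
            raise ValueError("an edit decision needs the edited text")
        return "agent.responses.approved", edited
    if decision == "reject":
        return None  # nothing is published, so nothing reaches the user
    raise ValueError(f"unknown decision: {decision}")


pending = "We can offer you 40% off today."
approved = review(pending, "edit", edited="Let me check current offers with our sales team.")
rejected = review(pending, "reject")
```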

Human escalation loops

With human escalation loops, you can:

- Add triggers for manual review based on output classification. For instance, if the toxicity score exceeds 0.7, bypass automated delivery.

- Add routing logic when revenue is at stake: When a discount (maybe above a certain threshold) is granted by the LLM, you route that request to a moderation team (e.g., "discount.queries.pending_approval").

- Add routing logic, so different topics go to different moderation teams: "finance.queries.pending_approval", "legal.queries.pending_approval", etc.

- Track how often you needed manual intervention, offering valuable metrics on agent reliability.

Kafka enables this escalation logic to be implemented as just another stream processing step, not as a redesign of your AI.

The result is that you can implement "humans in the loop" dynamically with full observability and without rewriting any business logic or risking user trust.
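As a sketch of escalation as a stream processing step, the routing function below picks a destination topic per response and then derives a manual intervention rate. The 0.7 toxicity threshold comes from the example above. The discount threshold, the field names, and the sample responses are hypothetical.

```python
TOXICITY_THRESHOLD = 0.7   # from the example above
DISCOUNT_THRESHOLD = 0.10  # hypothetical: discounts above 10% need review


def route(response: dict) -> str:
    """Choose the destination topic for one classified agent response."""
    if response.get("toxicity", 0.0) > TOXICITY_THRESHOLD:
        return "agent.responses.pending_approval"
    if response.get("discount", 0.0) > DISCOUNT_THRESHOLD:
        return "discount.queries.pending_approval"
    domain = response.get("domain")
    if domain in ("finance", "legal"):
        return f"{domain}.queries.pending_approval"
    return "agent.responses.final"


responses = [
    {"toxicity": 0.9},
    {"toxicity": 0.1, "discount": 0.2},
    {"toxicity": 0.1, "domain": "legal"},
    {"toxicity": 0.1},
]
destinations = [route(r) for r in responses]

# Share of responses that needed a human, a simple agent reliability metric.
manual_rate = sum(d.endswith("pending_approval") for d in destinations) / len(destinations)
```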

Event-driven AI marketing assistant

Let’s build a hypothetical event-driven marketing assistant.

- Event: The customer submits a form ⇒ "lead.new".

- Input validator checks tone and content ⇒ "lead.cleaned".

- LLM generates sales pitch ⇒ "pitch.generated".

- Brand filter runs guardrails ⇒ "pitch.compliant".

- KPI analyzer adds metadata ⇒ "pitch.analyzed".

- External enrichment service calls an API for product availability ⇒ "availability.checked".

- Response combiner merges enriched data into one final message ⇒ "message.ready".

- Emergency stop layer checks for risky recommendations ⇒ "message.pending_approval".

- Moderator reviews and approves or edits ⇒ "message.approved".

- The message router sends:

  - Slack to sales ⇒ "notifications.sales"

  - Email to customer ⇒ "email.send"

  - CRM update ⇒ "crm.sync"

Kafka powers all of this. Each step is handled by a decoupled service that listens to and emits events. The emergency approval step ensures that no message reaches the customer without passing through brand and safety checks, providing full traceability and control.

You can add new validations, models, or business logic by simply plugging in new consumers with no redeployments and no rewiring, just evolving your system at your pace (Figure 4).
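One way to reason about this pipeline is to write the topic topology down as data. In the sketch below, each entry stands for one service that consumes the key topic and emits to the listed topics. The `ROUTES` table mirrors the steps above, while the `trace` helper is a hypothetical utility for listing every topic an event passes through.

```python
ROUTES: dict[str, list[str]] = {
    "lead.new": ["lead.cleaned"],
    "lead.cleaned": ["pitch.generated"],
    "pitch.generated": ["pitch.compliant"],
    "pitch.compliant": ["pitch.analyzed"],
    "pitch.analyzed": ["availability.checked"],
    "availability.checked": ["message.ready"],
    "message.ready": ["message.pending_approval"],
    "message.pending_approval": ["message.approved"],
    "message.approved": ["notifications.sales", "email.send", "crm.sync"],
}


def trace(start: str) -> list[str]:
    """Breadth-first walk listing every topic reachable from the start topic."""
    visited: list[str] = []
    queue = [start]
    while queue:
        topic = queue.pop(0)
        if topic in visited:
            continue
        visited.append(topic)
        queue.extend(ROUTES.get(topic, []))
    return visited


path = trace("lead.new")
```

In this model, plugging in a new consumer is one more entry in the table. The existing entries stay as they are.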

Kafka is the backbone of agentic AI

Agentic AI isn’t monolithic. It’s a modular, asynchronous, and ever-evolving system, which is exactly what Kafka was built for.

Kafka offers:

- Loose coupling between components.

- Dynamic throttling and routing.

- Built-in (or built-around) observability and auditability.

- The freedom to grow and rewire without fear.

A service mesh gives you connections between services with greater observability and a stronger security posture, and Kafka gives you the intelligent and auditable glue between events. That’s exactly what the next generation of AI systems needs. So the next time your AI agent starts making plans, make sure those plans go through Kafka.

Check out my previous article, How to use service mesh to improve AI model security.